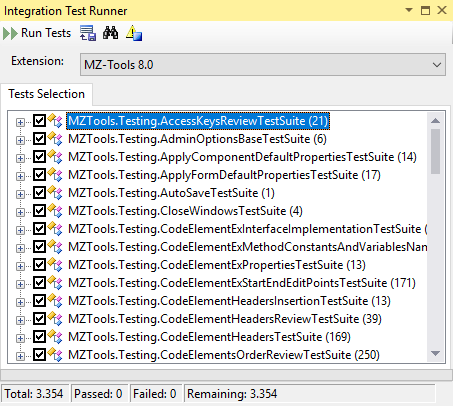

I started writing automated tests for my MZ-Tools extension early in the development of version 7.0 (then an add-in, not a package), about ten years ago, around 2008. At that time Visual Studio 2008 provided a Visual Studio TestHost dll (even with source code, if I remember correctly) to run automated tests of Visual Studio packages inside Visual Studio itself. I remember that this approach was so painful to use (crashes, hangs, etc.) that after months of investment I threw it all away and started to build my own test framework infrastructure and test runner:

(yep, I have 3,354 automated integration tests for my extension for Visual Studio)

It was perfect in most aspects because I owned the code and could adapt it to my needs, but it had one important drawback: it didn’t allow Continuous Integration (CI). Instead, I ran the tests manually before each (monthly) build. It had another drawback: I learned automated testing by myself without formal training, and I ended up with tons of integration tests (which use the real Visual Studio to host the extension), no system tests (which would mock Visual Studio) and no unit tests (which just test a single method or class). I know now that, for performance reasons (tests that don't need a real Visual Studio instance run much faster), it is much better to have a pyramid with tons of unit tests, lots of system tests and few integration tests.

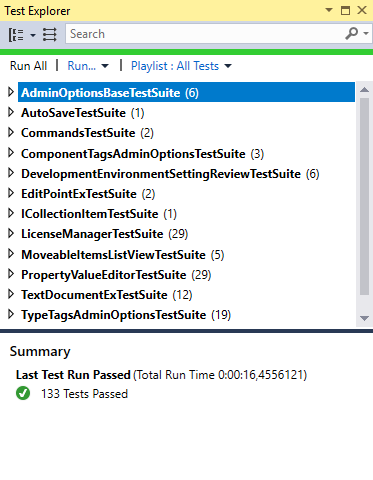
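The difference between an integration test and a system test, as defined above, comes down to what the code under test talks to. Below is a minimal Python sketch under stated assumptions: the feature depends only on a small code-model abstraction, so an integration test would hand it the real object from a running IDE, while a system test hands it an in-memory stub and needs no IDE process at all. All names here (`CodeModel`, `list_method_names`, `StubCodeModel`, `StubElement`) are hypothetical and only loosely inspired by EnvDTE.FileCodeModel; they are not the real EnvDTE API.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Protocol


class CodeElement(Protocol):
    """Hypothetical, simplified code element exposed by the IDE."""

    name: str
    kind: str  # e.g. "function" or "variable"


class CodeModel(Protocol):
    """Hypothetical code model of one source file (loosely inspired by
    EnvDTE.FileCodeModel, but NOT its real interface)."""

    def elements(self) -> Iterable[CodeElement]: ...


def list_method_names(model: CodeModel) -> List[str]:
    """Feature under test: it depends only on the CodeModel abstraction,
    so it can run against the real IDE or against a stub."""
    return sorted(e.name for e in model.elements() if e.kind == "function")


@dataclass
class StubElement:
    name: str
    kind: str


@dataclass
class StubCodeModel:
    """Stub that simulates the IDE's code model in memory (no IDE process)."""

    items: List[StubElement] = field(default_factory=list)

    def elements(self) -> Iterable[StubElement]:
        return list(self.items)


def test_list_method_names() -> None:
    # System test: the IDE is replaced by a stub with known contents.
    model = StubCodeModel([
        StubElement("Save", "function"),
        StubElement("count", "variable"),
        StubElement("Load", "function"),
    ])
    assert list_method_names(model) == ["Load", "Save"]


if __name__ == "__main__":
    test_list_method_names()
    print("system test passed")
```

The cost of this design is the one described in the post: every IDE object the feature touches needs a stub that behaves faithfully, which is why mocking a large object model takes a long time.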

Last year I started to focus on DevOps (even though I am a solo developer) and continuous integration, and decided to make my test framework infrastructure compatible with VS Test / MSTest, so that I could keep using my own test runner for integration tests but also run all of them as system tests, mocking Visual Studio with stubs:

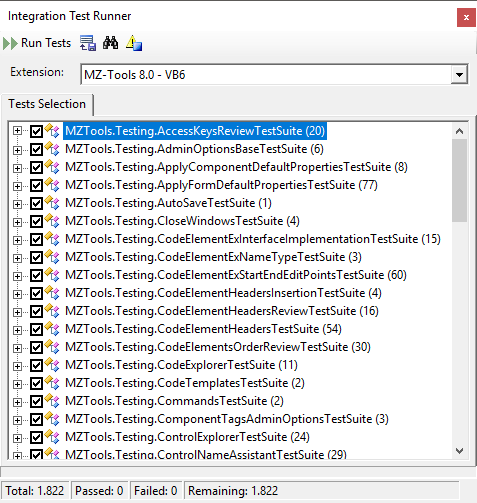

As you can see, my progress is quite modest at this point (only 133 system tests vs 3,354 integration tests) because I need to mock the full EnvDTE.FileCodeModel for most tests. But the approach is feasible: I have already fully mocked with stubs the whole VBA / VB6 IDEs that I use for MZ-Tools 8.0 for VBA / VB6. For example, I have 1,822 integration tests for VB6 with my own test runner (an add-in for VB6):


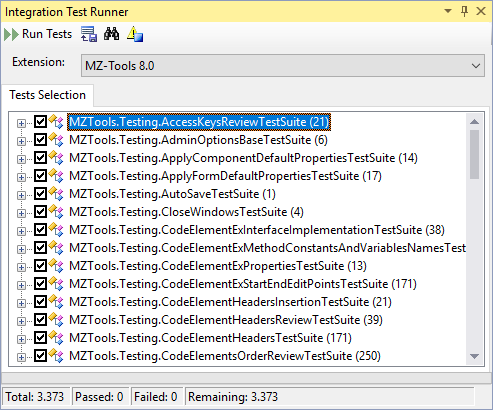

And the same 1,822 tests as system tests using the test runner of Visual Studio:


Once I finish the system tests for Visual Studio, the next step is to try again to run the integration tests using the test runner of Visual Studio rather than my own, which will give me Continuous Integration not only for system tests but also for integration tests. For that approach, I learned about the Microsoft/VisualStudio-TestHost project on GitHub from the Two videos about building High Performance Extensions by Omer Raviv. I still have to read and learn about it, but I think it is based on the code of the VS TestHost of 2008, and hopefully these 10 years have been used to solve the problems that made me abandon that approach.


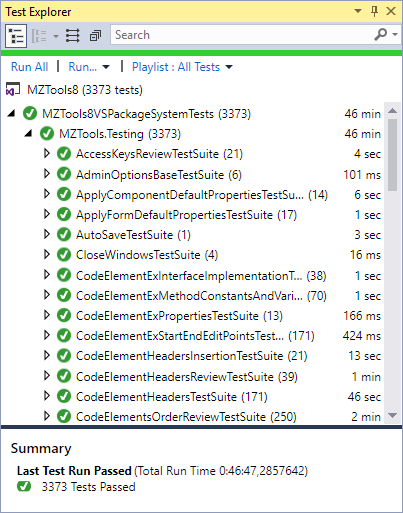

Update (July 29, 2018): yesterday I finished the last stubs for Visual Studio and now all the integration tests for the real Visual Studio are also system tests with stubs that simulate the behavior of Visual Studio:

Thank you for the awesome blog post! And thanks for the mention 🙂

I totally believe that you should have a *ton* more unit tests for your VS extensions than system and UI-based tests. It is interesting to note that even some folks at Microsoft have been on this same journey, where they first struggled to find the right balance: https://www.youtube.com/watch?v=Lprj_4Wpi2s talks about how the VSTS team put in significant effort to convert a test suite that was *mostly* integration tests into *mostly* fast-running unit tests. It took them several years, but the effort paid off in spades.

One thing I skipped over in my video for lack of time, is that when you’re writing integration or system tests, it’s initially pretty hard to avoid writing flaky tests. Having a flaky test is a lot worse than having no test at all. In a team environment, a flaky test is like a virus – it’s a thought virus that will easily infect your team – the underlying thought being “our tests are a pain in the neck and not even worth the effort, because when a test is failing it doesn’t necessarily mean that something actually went wrong”.

The main reason it’s hard not to write flaky tests is that a big chunk of the VS Extensibility API is very particular about whether it’s being invoked on the STA thread or an MTA thread, and will fail on you in very random and surprising ways if you get it wrong. Our solution to that problem in the OzCode Visual Studio integration test framework is to leverage BDDfy’s StepExecutor. In BDDfy, each declarative part of the test (the “Given… When… Then…” parts) is called a “Step”. A StepExecutor allows you to control exactly how the step is executed, and to add custom logic before and/or after the step is run. We use this to make sure that, while the test itself is running on an MTA thread, each Step runs on the STA thread, and we wait until the VS UI thread goes idle before executing the next step.

The implementation of this technique is actually only 20 lines of code (see https://github.com/oz-code/BDDfyVSIntegrationTestSample/blob/7bfcd111e591abf06b7e4aabfd2a7b4740f3ca4d/src/BDDfyConfiguration.cs#L21 ), but it is a complete game-changer in terms of being able to write integration tests for VS extensions that are completely deterministic.
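The idea behind that StepExecutor (run every step on the UI thread, then wait for it to go idle) can be illustrated outside BDDfy. The following is a hedged Python analog, not the OzCode code and not the BDDfy API: Python has no COM apartments, so a single dedicated thread stands in for the Visual Studio UI (STA) thread, and "idle" is approximated as "that thread's work queue has drained". The class `UiThreadStepExecutor` and its methods `post` and `run_step` are hypothetical names.

```python
import queue
import threading
from typing import Callable, List


class UiThreadStepExecutor:
    """Hypothetical sketch: runs every test step on one dedicated thread
    (standing in for the IDE's UI thread) and waits until that thread is
    idle before returning. Not BDDfy's API or OzCode's implementation."""

    def __init__(self) -> None:
        self._work: "queue.Queue[Callable[[], None]]" = queue.Queue()
        self._errors: List[Exception] = []
        self._thread = threading.Thread(target=self._loop, daemon=True)
        self._thread.start()

    def _loop(self) -> None:
        # The "UI thread": executes queued work items one at a time, forever.
        while True:
            work = self._work.get()
            try:
                work()
            except Exception as exc:  # keep the thread alive; report later
                self._errors.append(exc)
            finally:
                self._work.task_done()

    def post(self, work: Callable[[], None]) -> None:
        """Schedule work on the UI thread without waiting for it."""
        self._work.put(work)

    def run_step(self, step: Callable[[], None]) -> None:
        """Run one Given/When/Then step on the UI thread, then block the
        calling (test) thread until the UI thread has drained all pending
        work, including anything the step itself posted."""
        self._work.put(step)
        self._work.join()  # "idle" here means: no queued or running work
        if self._errors:
            error = self._errors[0]
            self._errors.clear()
            raise error


if __name__ == "__main__":
    executor = UiThreadStepExecutor()
    log: List[str] = []

    def when_user_runs_command() -> None:
        log.append("command started")
        # Simulate the IDE finishing part of the work asynchronously.
        executor.post(lambda: log.append("async work done"))

    executor.run_step(when_user_runs_command)
    # The Then part runs on the test thread only after the UI thread is idle.
    assert log == ["command started", "async work done"]
    print(log)
```

Waiting for the queue to drain after every step is what keeps the next step, or a Then assertion running on the test thread, from racing against work that the previous step scheduled asynchronously; that race is a common source of the flakiness described above.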

How do you install VS Test Host? I can’t find any documentation; this is infuriating. Thanks for the post, though.

Don’t worry, now I see what I was missing.